The Invisible Transformation of Modern Warfare

Artificial intelligence is no longer confined to tech labs, startup pitches, or public-facing chatbots. While the world debates AI ethics, copyright disputes, and job disruption, a quieter and far more consequential transformation is unfolding behind reinforced doors and air-gapped servers.

Across the globe, militaries are deploying artificial intelligence on classified networks: systems disconnected from the public internet, shielded from scrutiny, and embedded deep inside national defense infrastructures.

These are not experimental chatbots. They are battlefield decision aids, logistics optimizers, drone coordinators, cyber defense engines, and war-gaming simulators. And most of them operate far from public view.

The next AI arms race is not happening on social media. It is happening on secret networks.

The Shift from Open AI to Secured Military AI

Most civilian AI systems rely on cloud infrastructure, public data pipelines, and large-scale internet connectivity. Military AI systems operate differently.

Defense agencies are building:

- Air-gapped large language models

- Secure battlefield analytics engines

- Classified computer vision systems

- Encrypted AI-enabled drone control systems

- Autonomous threat detection platforms

These systems run on classified networks such as military intranets, secured satellite links, and compartmentalized defense clouds. The goal is simple: retain AI’s computational power without exposing it to adversarial intrusion. But the consequence is profound. The most powerful AI systems may never be visible to the public.

The New Military AI Stack

Modern military AI development is layered. It typically includes:

1. Data Collection

- Satellite imagery

- Drone feeds

- Signals intelligence

- Cyber telemetry

- Logistics and supply chain movement

- Biometric and battlefield sensor data

2. Processing on Secure Infrastructure

- Defense-specific supercomputers

- Hardened data centers

- Classified cloud environments

- Dedicated AI accelerators subject to export controls

3. Application Layers

- Target identification assistance

- Predictive logistics

- Strategic war-gaming

- Cyber intrusion detection

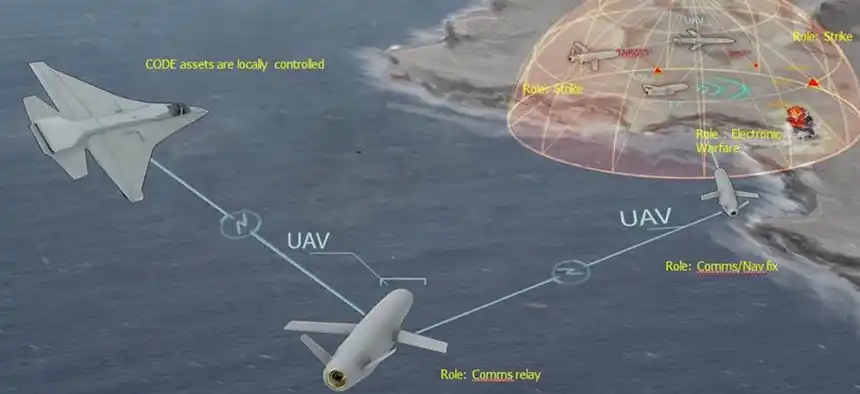

- Autonomous vehicle coordination

- Real-time battlefield mapping

Each layer increases automation in decision cycles that were once entirely human-driven. The key difference from public AI systems is this: military AI is optimized for speed, signal dominance, and strategic advantage, not user interaction.

A Global Pattern, Not a Single Power

This transformation is not limited to one country.

- The United States has integrated AI into defense research agencies and battlefield analytics initiatives.

- China has openly declared “intelligentized warfare” a strategic objective.

- Russia has emphasized autonomous systems and AI-enabled military robotics.

- Israel has developed AI-assisted targeting and drone coordination technologies.

- India, the United Kingdom, France, and NATO members are accelerating defense AI research.

- Middle Eastern states are investing heavily in AI-enabled surveillance and unmanned systems.

The pattern is clear: military advantage is increasingly tied to AI capability. This is not merely modernization. It is strategic repositioning.

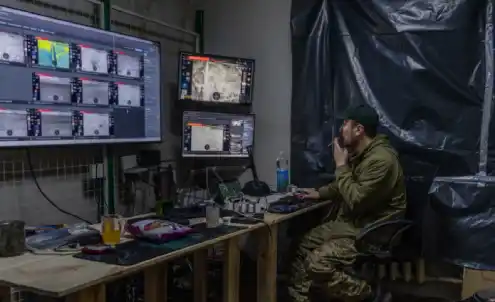

Autonomous Systems: The Most Visible Layer

The most publicly visible aspect of military AI is autonomous or semi-autonomous systems:

- AI-guided drones

- Loitering munitions

- Robotic ground vehicles

- Maritime autonomous vessels

- AI-assisted missile defense systems

What is less discussed is the decision-support layer behind them. Many of these systems are not fully autonomous. Instead, they rely on AI-generated recommendations presented to human operators. This creates a new battlefield dynamic: human judgment accelerated, and potentially pressured, by algorithmic suggestions. When decisions must be made in seconds, the influence of AI becomes structurally embedded.

War-Gaming in the Age of Machine Simulation

Another largely unseen development is AI-driven war simulation. Militaries now use advanced machine learning systems to:

- Run millions of simulated conflict scenarios

- Predict adversary behavior

- Optimize force deployment

- Model supply chain resilience

- Test escalation responses

These simulations can generate strategic insights faster than traditional military exercises. Yet the models themselves, along with their assumptions, biases, and failure modes, are classified. The public rarely knows what scenarios are being modeled or what conclusions are being drawn.
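To make "running millions of simulated scenarios" concrete, here is a minimal Monte Carlo sketch in Python. It is a toy illustration, not any military's actual method: the scenario (a shipment that must cross several route segments), the function name `estimate_delivery_rate`, and every probability are hypothetical assumptions invented for this example.

```python
import random


def estimate_delivery_rate(segment_disruption_probs, trials=100_000, seed=0):
    """Estimate how often a shipment crosses every route segment undisrupted.

    segment_disruption_probs: hypothetical per-segment disruption probabilities.
    Each trial is one simulated scenario; segments are assumed independent.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    delivered = 0
    for _ in range(trials):
        # The shipment arrives only if no segment is disrupted in this scenario.
        if all(rng.random() >= p for p in segment_disruption_probs):
            delivered += 1
    return delivered / trials


if __name__ == "__main__":
    probs = [0.05, 0.10, 0.02]  # made-up numbers, purely illustrative
    simulated = estimate_delivery_rate(probs)
    exact = 1.0
    for p in probs:
        exact *= 1.0 - p  # closed form exists only because the toy is simple
    print(f"simulated: {simulated:.4f}, closed form: {exact:.4f}")
```

The closed-form check is possible only because this toy is so simple; simulation earns its keep when no such formula exists. Note that the result is entirely determined by the assumed inputs, which is why the hidden assumptions inside classified models matter so much.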

Cyber-AI Convergence

The intersection of artificial intelligence and cyber warfare may be the most destabilizing domain. AI is being used to:

- Detect cyber intrusions in real time

- Identify anomalous network behavior

- Automate defensive patching

- Predict attack vectors

- Simulate offensive penetration strategies
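As a rough illustration of what "identifying anomalous network behavior" can mean at its simplest, the sketch below flags measurements that sit far from a historical baseline using a z-score. This is a deliberately basic statistical stand-in, not a description of any deployed defense system; the function name `find_anomalies`, the threshold of three standard deviations, and the traffic numbers are all assumptions made for the example.

```python
import statistics


def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs in `observed` that deviate from `baseline`.

    baseline: historical measurements assumed to represent normal traffic.
    observed: new measurements to check.
    threshold: how many standard deviations count as anomalous (an assumption).
    """
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)  # needs at least two baseline points
    if stdev == 0:
        # Flat baseline: any different value is a deviation.
        return [(i, x) for i, x in enumerate(observed) if x != mean]
    return [
        (i, x)
        for i, x in enumerate(observed)
        if abs(x - mean) / stdev > threshold
    ]


if __name__ == "__main__":
    # Hypothetical connections-per-minute counts, invented for illustration.
    baseline = [98, 102, 101, 99, 100, 103, 97, 100]
    observed = [101, 99, 250, 100]
    print(find_anomalies(baseline, observed))  # prints [(2, 250)]
```

Real detection systems would presumably use far richer features and learned models, but the underlying idea is the same: define "normal," then measure distance from it.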

At the same time, adversaries are developing AI-assisted cyber tools capable of:

- Generating adaptive malware

- Automating phishing campaigns

- Exploiting vulnerabilities at scale

Because these systems operate within classified environments, very little detail is publicly disclosed. What remains unclear is the extent to which AI is being used offensively versus defensively.

The Black Box Problem

AI systems, especially large neural networks, are often opaque. In civilian use, this creates concerns about bias and accountability. In military use, it raises deeper questions:

- How are targeting recommendations validated?

- What safeguards exist against false positives?

- How are errors audited in classified environments?

- Who is ultimately accountable if AI-informed decisions cause unintended escalation?

The answers are often hidden within classified doctrine and restricted oversight frameworks. Secrecy protects operational advantage, but it also limits public debate.
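The false-positive question above has a well-known arithmetic core: when genuine threats are rare, even an accurate classifier produces mostly false alarms. The short Python sketch below works this through with Bayes' rule. All figures are hypothetical and chosen only to show the effect; the function name `precision` and its parameters are assumptions for the example, not numbers from any real system.

```python
def precision(base_rate, sensitivity, false_positive_rate):
    """Probability that a flagged item is a true positive (Bayes' rule)."""
    true_alerts = base_rate * sensitivity
    false_alerts = (1.0 - base_rate) * false_positive_rate
    total = true_alerts + false_alerts
    if total == 0:
        raise ValueError("no alerts would ever be raised with these inputs")
    return true_alerts / total


if __name__ == "__main__":
    # Hypothetical figures: 1 in 1,000 objects is a real threat, the model
    # catches 99% of real threats and wrongly flags 1% of everything else.
    p = precision(base_rate=0.001, sensitivity=0.99, false_positive_rate=0.01)
    print(f"share of alerts that are real: {p:.1%}")
```

With these invented inputs, only about 9% of alerts correspond to a real threat, even though the model is "99% accurate" in both directions. That gap is what validation and auditing procedures would need to account for.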

The Chip Supply Factor

Another dimension of the AI arms race is semiconductor control. Advanced AI models require high-performance chips. Governments are increasingly:

- Restricting exports of advanced AI processors

- Subsidizing domestic semiconductor production

- Building national AI supercomputing clusters

- Creating strategic stockpiles of critical hardware

The supply chain for AI computation is now a geopolitical battleground. Military AI capability depends not only on algorithms but on silicon.

Ethical Tension: Automation vs. Human Control

Global discussions about lethal autonomous weapons continue in international forums. Yet many systems deployed today exist in a gray zone:

- Human-in-the-loop systems

- Human-on-the-loop systems

- Human-out-of-the-loop systems (in limited contexts)

The shift is incremental rather than abrupt. Instead of a dramatic leap to fully autonomous warfare, militaries are gradually increasing AI’s role in sensing, analysis, and recommendation. Each incremental increase may appear minor. Collectively, they represent structural transformation.
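The three oversight modes listed above can be told apart by one question: what happens when the human says nothing? The following Python sketch is a conceptual illustration only, under the assumption that a system produces a recommendation and then waits for a human response until some deadline. The names `ControlMode` and `may_proceed` are hypothetical and do not come from any real doctrine or software.

```python
from enum import Enum


class ControlMode(Enum):
    HUMAN_IN_THE_LOOP = "in"       # nothing proceeds without explicit approval
    HUMAN_ON_THE_LOOP = "on"       # proceeds unless a human vetoes in time
    HUMAN_OUT_OF_THE_LOOP = "out"  # proceeds with no human checkpoint


def may_proceed(mode, approved=None):
    """Decide whether a machine recommendation may be acted on.

    approved: True (human approved), False (human vetoed),
              or None (no human response before the deadline).
    """
    if mode is ControlMode.HUMAN_IN_THE_LOOP:
        return approved is True
    if mode is ControlMode.HUMAN_ON_THE_LOOP:
        return approved is not False
    if mode is ControlMode.HUMAN_OUT_OF_THE_LOOP:
        return True
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    # Show how each mode treats silence from the operator.
    for mode in ControlMode:
        print(mode.name, may_proceed(mode, approved=None))
```

In this sketch the first two modes differ only in how silence is treated. Under on-the-loop control, an operator who cannot respond in time has effectively approved, which is why compressed decision windows can shift a system toward autonomy without any formal change in policy.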

The Classified Networks Themselves

Much of this AI development occurs on networks invisible to the public internet:

- Military intranets

- Compartmentalized defense clouds

- Encrypted battlefield mesh systems

- Satellite-linked secure communication grids

These networks are intentionally isolated. Air-gapped AI systems prevent external interference, but they also prevent external oversight. This double-edged nature of secrecy is central to understanding the AI arms race.

Strategic Stability in an AI Era

AI accelerates decision-making. In military contexts, faster decision cycles can mean:

- Rapid threat detection

- Accelerated retaliation calculations

- Compressed escalation timelines

Strategic stability historically relied on communication, signaling, and time for deliberation. If AI shortens those timelines, crisis dynamics could change. The question is not whether AI will make war more likely. It is whether it will make mistakes propagate faster.

What Remains Classified

While public statements highlight defensive applications, several areas remain largely undisclosed:

- Offensive AI integration frameworks

- Target prioritization algorithms

- Real-time battlefield AI performance data

- AI system failure case studies

- Autonomous weapons testing evaluations

- Cross-border military AI collaboration agreements

Secrecy is expected in defense. But the scope of AI integration suggests that transparency debates may intensify in the coming years.

The Silent Arms Race

Unlike nuclear weapons, AI does not require visible test explosions. Unlike a traditional arms buildup, AI progress can occur within server racks and research labs. The AI arms race is silent, incremental, and computational. Its milestones are measured not in missile counts but in model accuracy, processing speed, and decision latency. And much of it unfolds beyond public awareness.

The Long-Term Question

Artificial intelligence is becoming foundational infrastructure for modern defense systems. The critical question is not whether militaries will use AI; they already do. The deeper question is how the balance between secrecy, accountability, speed, and control will evolve as AI systems become more embedded in national security architectures, because once AI is integrated into classified networks, it does not easily return to public oversight. The future of military power may be defined not by visible arsenals, but by invisible algorithms running on networks the public will never see.